This week, another warning flag was raised concerning the rapid progress of advanced artificial intelligence technology. This time, it took the form of an open letter authored by current and former employees at some of the world’s top AI labs — and cosigned by leading experts including two of the three “godfathers of AI.”

This so-called “right to warn” letter had a clear set of demands for AI labs. Given the immense potential and risks posed by the technology, the signatories called upon labs to:

- Not coerce ex-employees into non-disparagement agreements to avoid criticism;

- Facilitate an anonymous process for reporting risks to third parties;

- Support a culture of open criticism, allowing current and former employees to voice concerns;

- Not retaliate against current and former employees who do blow the whistle.

The need for this letter is, perhaps, underscored by the fact that many of the employees who signed it only felt comfortable doing so anonymously. To understand why these demands are being made, and why some signatories are unable to even share their names publicly, we have to recap some of the recent news surrounding OpenAI.

Buying their silence?

On May 18, journalist Kelsey Piper released an article on Vox entitled “ChatGPT can talk, but OpenAI employees sure can’t.”

The article describes the many recent (and concerning) departures from OpenAI, predominantly of staff focused on safety and alignment, who often said little about their decision to leave. Piper writes:

It turns out there’s a very clear reason for that. I have seen the extremely restrictive off-boarding agreement that contains nondisclosure and non-disparagement provisions former OpenAI employees are subject to. It forbids them, for the rest of their lives, from criticizing their former employer. Even acknowledging that the NDA exists is a violation of it.

Kelsey Piper, Vox

As the article reveals, OpenAI had been surprising departing employees with an implicit threat: that they might lose all their equity in the company unless they promised never to publicly criticize it again. Even mentioning that this agreement existed would constitute a violation of it.

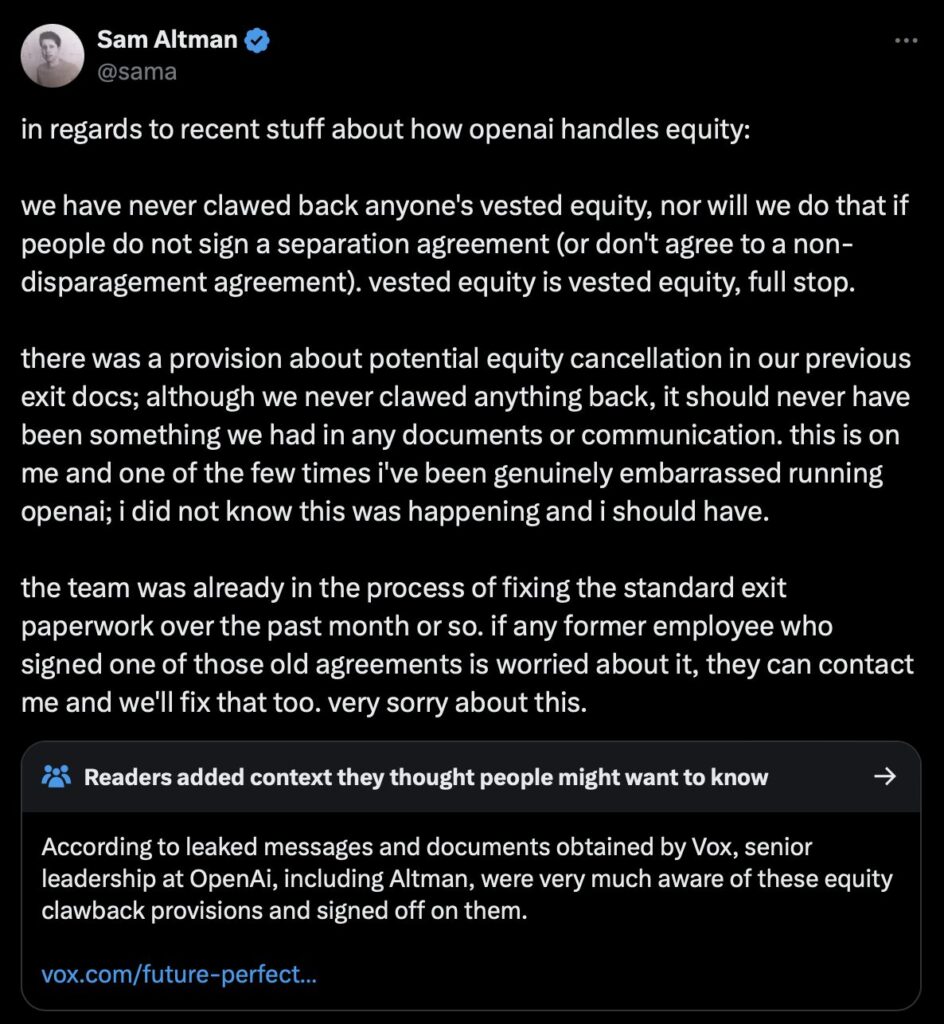

After Vox’s reporting, Sam Altman issued an apology on X (formerly Twitter), writing that the equity cancellation provision in former employees’ resignation agreements “should never have been something we had in any documents or communication” and that he “did not know this was happening and should have.”

However, as a community note on the tweet points out, the odds are that Altman did know about this. Later reporting from Kelsey Piper revealed company documents, bearing Altman’s signature, that state in plain language that employees may lose equity if they don’t sign a release of claims agreement.

The “Right to Warn” Letter

It is in this context, following the revelation of OpenAI’s attempts to effectively buy the silence of former employees, that the “right to warn” letter was released. In the words of the authors of the letter:

So long as there is no effective government oversight of these corporations, current and former employees are among the few people who can hold them accountable to the public. Yet broad confidentiality agreements block us from voicing our concerns, except to the very companies that may be failing to address these issues. Ordinary whistleblower protections are insufficient because they focus on illegal activity, whereas many of the risks we are concerned about are not yet regulated. Some of us reasonably fear various forms of retaliation, given the history of such cases across the industry. We are not the first to encounter or speak about these issues.

RightToWarn.ai

The letter was covered widely in the media, including a front-page New York Times article from journalist Kevin Roose. In it, he writes, “A group of OpenAI insiders is blowing the whistle on what they say is a culture of recklessness and secrecy at the San Francisco artificial intelligence company.”

A spokesperson from OpenAI responded to the letter via comment to the New York Times, but apparently refused to implement any of the common-sense demands from the letter. Instead, OpenAI opted for PR-speak, claiming (without clear basis) that OpenAI is proud of creating “the most capable and safest AI systems.” Google, another company implicated by the letter, refused to comment at all.

We recommend reading the letter yourself and seeing what you make of the demands. In our view, these are common-sense protections that represent the bare minimum for the responsible development of such an important technology.

How can the public support this effort?

Tech companies are often resistant to change until the public makes its voice heard, at which point they’ll sheepishly correct their behavior. Therefore, we think one of the most important things you can do to support the letter is to share it on social media, and tell top AI labs that you are aware of, and concerned about, their inadequate approach to whistleblower protections.

The demands of the letter also resonate with a letter released by The Midas Project and allies the previous week, concerning the lack of accountability faced by OpenAI in particular.