If there’s one thing to know about the current state of AI development, it’s this:

Things are moving faster than almost anyone anticipated.

For a long time, there was uncertainty about whether the set of methods known as machine learning would ever be able to achieve human-level general intelligence, let alone surpass it.

In the late twentieth century, there were two so-called "AI winters," in which pessimism about progress in the field led to sharp cuts in AI investment and a slowdown in research.

But in recent years, things have changed dramatically.

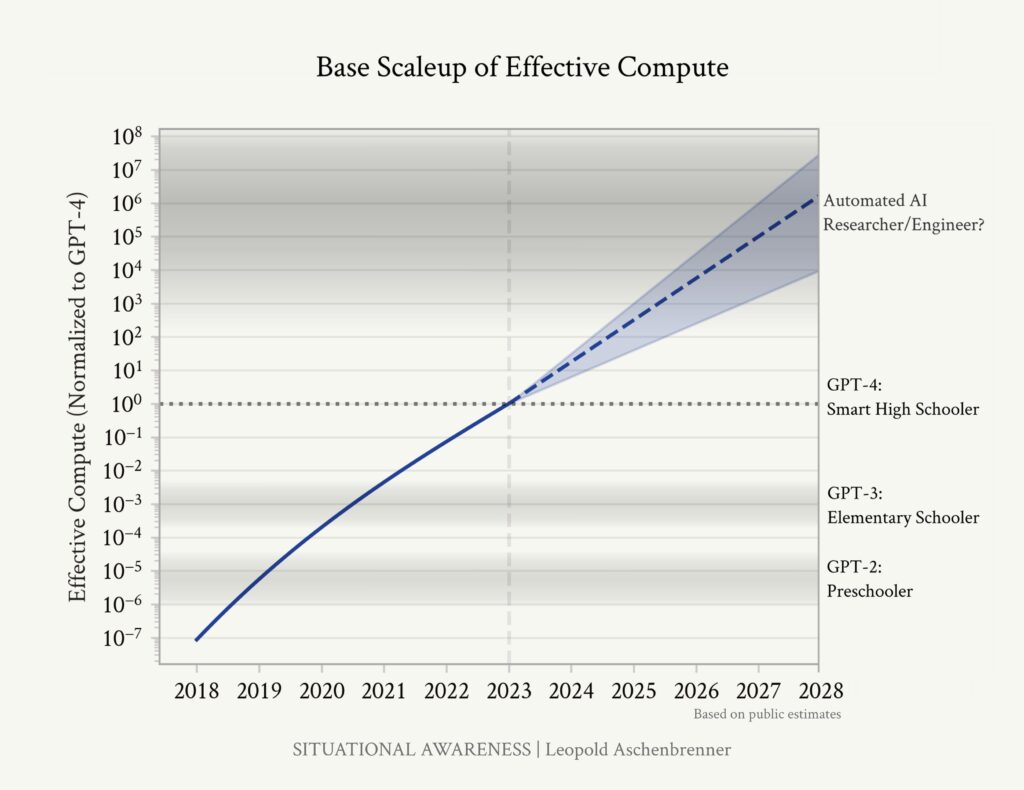

If you had to identify a start date for the current AI boom, you might point to the publication of AlexNet in 2012. It was one of the first signs of what would later become known as "the bitter lesson": the idea that progress in AI depends less on clever, hand-designed algorithms or human insight and more on applying ever larger amounts of data and computation to the problem.

Since then, progress has been extremely rapid. Five years ago, few people would have predicted how far the current paradigm would take us. It now looks increasingly likely that we will see human-level artificial intelligence, or even superintelligence, within the next two decades.

But how soon exactly? Nobody knows. This is unlike any technological development in human history, and so there is a limit to what we can learn from looking at past technologies.

Still, forecasters have tried to put numbers on the question. One of the best ways to get a high-level picture of the situation is to look at Metaculus, a website where forecasters with different views compete to make accurate predictions about the future and whose individual predictions are combined into an aggregate forecast.

Metaculus currently predicts a 50% chance that human-level artificial intelligence, or "AGI," will have been developed by the early 2030s.

This aggregate prediction aligns with what many leading experts in the field have said about their personal expectations:

- Sam Altman, CEO of OpenAI: “I expect that by the end of this decade, and possibly somewhat sooner than that, we will have quite capable systems that we look at and say, ‘Wow, that’s really remarkable.’” (source)

- Geoffrey Hinton, Turing Award winner and so-called godfather of AI: "I think in five years' time [artificial intelligence] may well be able to reason better than us." (source)

- Demis Hassabis, CEO of Google DeepMind: "I don't see any reason why that progress is going to slow down. I think it may even accelerate. So I think we could be just a few years, maybe within a decade, away [from AGI]." (source)

- Jensen Huang, CEO of Nvidia: “If I gave an AI … every single test that you can possibly imagine, you make that list of tests and put it in front of the computer science industry, and I’m guessing in five years time, we’ll do well on every single one.” (source)

- Paul Christiano, head of AI safety at the US AI Safety Institute: "I think I sympathize more with people who are like: 'How could it not happen in 3 years?' than with people who are like: 'No way it's going to happen in eight years.'" (source)

- Leopold Aschenbrenner, former researcher at OpenAI: "It is strikingly plausible that by 2027, models will be able to do the work of an AI researcher/engineer. That doesn't require believing in sci-fi; it just requires believing in straight lines on a graph." (source)

Nobody is saying that this is necessarily how the future will play out. There are many proposed reasons why AI progress might stall again, from running out of high-quality training data to a market crash that stifles innovation and triggers a third AI winter.

The important thing to realize is that we are plausibly within striking distance of AI systems so powerful that the world will begin to look unrecognizable.

With other technologies, we don't wait for certainty before acting. Even a small chance of an airplane crashing, for example, is enough for society to require strict safety measures that reduce the risk.

AI should be no different. We need to do more, and soon, to ensure that we can avoid the risks posed by advanced artificial intelligence.